Lessons learned from Lehman Brothers

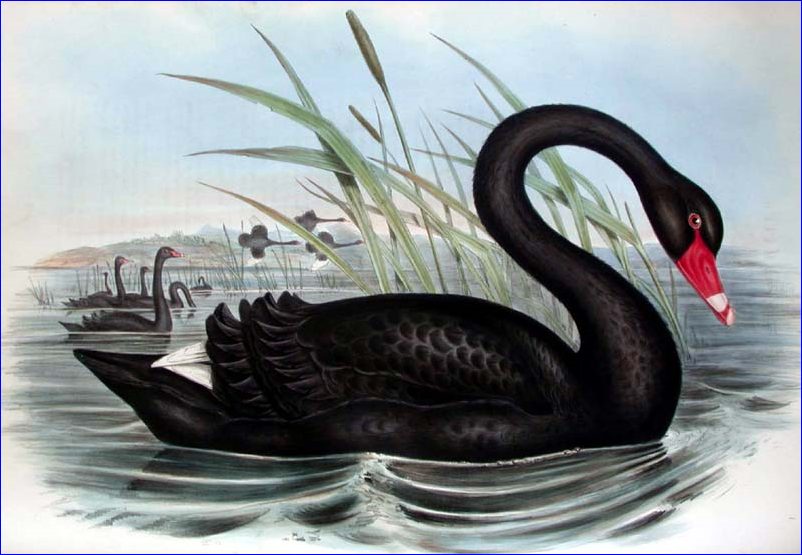

Swans were assumed to be always white, until the discovery of black swans in Australia. Rare, unexpected but highly significant events are much more common than we think.

David DeMuro, Lehman Brothers’ former head of compliance, recently spoke out for the first time and gave an insider’s perspective on the failures at the US investment bank that led to its spectacular collapse in September 2008 [Ref 1]. He cited four reasons for what went wrong at Lehman. There are many parallels between these issues and managing potential major accidents in high hazard industries.

1. MORTGAGES AVAILABLE FOR ALL

DeMuro explained that there had been a great deal of political pressure to increase the availability of mortgages. In the midst of a bubble there is a great deal of money to be made, and plain old-fashioned greed means high-earning employees are invariably reluctant to voice their concerns over excessive risk-taking.

Major hazard industries are also in business to make profits. There are many examples where a culture of production before safety has contributed to major accidents. Sometimes this culture is blatant, but more often mixed messages are given in the form of incompatible goals, such as “maximise production” and “safety is our number one priority”, which, if not carefully communicated, can leave the workforce unsure as to what takes precedence.

For example, an explosion on 25th September 1998 at the Longford gas plant in Australia killed two workers and injured eight [Ref 2]. An operator told the inquiry: “I faced a dilemma on the day, standing 20 metres from the explosion and fire, as to whether or not I should activate ESD 1 [shut down the plant], because I was, for some strange reason, worried about the possible impact on production”. The operator’s dilemma was understandable: the inquiry concluded that the company’s safety culture was more oriented towards preventing lost time than protecting workers.

2. OVER-RELIANCE ON RISK MODELS

DeMuro confirmed that there was a huge amount of faith in the financial risk models. Yet most models incorrectly assumed that risks were uncorrelated, whereas others have argued that rare, unexpected but highly significant events are much more common than we think [Ref 3].
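To illustrate why the assumption of uncorrelated risks matters, the sketch below compares the chance that several items (loans, or safety barriers) all fail at once under independence with the chance when a small share of each failure probability comes from a shared cause. The function name `prob_all_fail`, the parameter `beta` and all the numbers are hypothetical and chosen purely for illustration; this is not a model used by Lehman or taken from any of the references.

```python
def prob_all_fail(p: float, n: int, beta: float = 0.0) -> float:
    """Probability that all n items fail, each with marginal failure probability p.

    beta is the (assumed) fraction of each item's failure probability that
    comes from a shared cause hitting every item at once.
    beta = 0 reproduces the independence assumption.
    """
    if not (0.0 <= p < 1.0 and 0.0 <= beta <= 1.0 and n >= 1):
        raise ValueError("need 0 <= p < 1, 0 <= beta <= 1, n >= 1")
    common = beta * p                       # probability of the shared cause
    residual = (p - common) / (1 - common)  # independent part; keeps each marginal equal to p
    return common + (1 - common) * residual ** n


p, n = 0.01, 3  # illustrative numbers only
independent = prob_all_fail(p, n)           # equals p ** n
correlated = prob_all_fail(p, n, beta=0.1)  # 10% of each failure probability is shared
print(f"independent:      {independent:.2e}")
print(f"10% shared cause: {correlated:.2e}")
print(f"ratio:            {correlated / independent:.0f}x")
```

With these illustrative inputs, each item is exactly as reliable in both cases, yet the simultaneous failure of all three is about a thousand times more likely once a modest shared cause is allowed for.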

In the high hazard industries, the use of numerical risk models is widespread. Quantitative risk assessment (QRA) can be, and has been, misused, typically in efforts to ‘prove’ that calculated risk levels meet acceptance criteria.

In contrast, the sensible use of QRA is in helping to make better risk-informed decisions rather than blindly believing in a calculated value of risk. In particular, the probabilistic approach of QRA can be extremely useful for considering a broad range of scenarios, especially extreme events.

QRA involves lots of numbers and can appear to be objective when in fact there are many judgements throughout the analysis. It is the role of experienced QRA practitioners to interpret results in the context of the uncertainties inherent within the analysis and to communicate these clearly to decision makers. It is management’s duty to view risk model results as one input to the overall decision-making process. Models are not a substitute for good judgement.
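As a rough illustration of how judgements feed through to the result, the sketch below propagates uncertain inputs through a deliberately simple risk calculation using Monte Carlo sampling. The three inputs, their distributions and the function name `simulate_risk` are all assumptions made for this example, not values from any real QRA; the point is only that the output is a range rather than a single number.

```python
import math
import random
import statistics


def simulate_risk(n_samples: int = 10_000, seed: int = 1) -> list:
    """Sample a toy risk figure: leak frequency x ignition x fatality probability."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    results = []
    for _ in range(n_samples):
        # Hypothetical inputs, each reflecting an analyst's judgement
        leak_freq = rng.lognormvariate(math.log(1e-3), 1.0)  # leaks per year
        p_ignition = rng.uniform(0.01, 0.1)                  # chance a leak ignites
        p_fatality = rng.uniform(0.1, 0.5)                   # chance ignition is fatal
        results.append(leak_freq * p_ignition * p_fatality)
    return results


risks = simulate_risk()
cuts = statistics.quantiles(risks, n=20)  # 19 cut points: 5%, 10%, ..., 95%
print(f"5th percentile:  {cuts[0]:.1e} per year")
print(f"median:          {statistics.median(risks):.1e} per year")
print(f"95th percentile: {cuts[-1]:.1e} per year")
```

Reporting the spread between the 5th and 95th percentiles alongside the central estimate is one way to keep the underlying uncertainty visible to decision makers.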

3. IT’S NOT THE REGULATOR’S FAULT

DeMuro explained that, as far as regulatory compliance was concerned, Lehman had been performing well. He was reluctant to blame the regulators for failing to spot problems before they hit, as many commentators have done.

In the major hazard industries it is the operator’s responsibility, as the ‘duty holder’, to ensure compliance with health, safety and environmental legislation. Furthermore, compliance with prescriptive legislation is the minimum standard required. Indeed, many regulators worldwide have moved to a ‘goal-setting’ regime rather than a prescriptive ‘tick-box’ regime, and require operators to demonstrate control of major hazards via effective management systems and documented safety cases.

The underlying principle is that risks must be reduced to a level that is as low as reasonably practicable (ALARP). Demonstrating compliance is far from easy – it needs to take into account the views and concerns of those stakeholders affected by the decision, and requires the documented consideration of improvement options, both implemented and discounted.

4. MANAGING RISK IN SILOS

DeMuro explained that risk managers tend to operate in silos and report their findings individually rather than collectively. As a result, they may miss the truly dramatic problems lurking just around the corner.

Much has been done in the major hazard industries to better integrate the risk management of health, safety, security, environment and social responsibility. No doubt there is room for improvement. For example, at times there can be a disconnect between safety and availability (production) decisions.

CONCLUSION

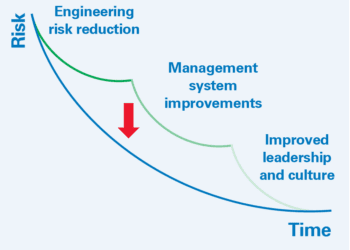

The collapse of Lehman Brothers was considered unthinkable, but it happened. Interestingly, the lessons learned are relevant to high hazard industries, where preventing major accidents requires an equivalent approach to understanding and managing risk in engineering design, management controls and organisational culture.

References

1. www.complinet.com/connected/news-and-events/webcasts

2. Lessons from Longford, Andrew Hopkins, 2000

3. The Black Swan, Nassim Nicholas Taleb, 2007

This article first appeared in RISKworld Issue 16.